Link:

https://dannyman.toldme.com/2011/07/05/wordpress-upgrade-php53-centos/

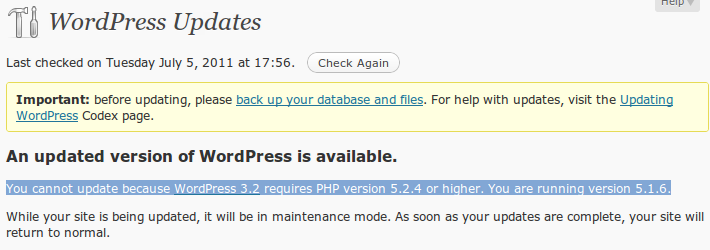

If you have a self-hosted WordPress blog, you really ought to keep it up to date. Popular software is a popular security target, and as new exploits are discovered, new patches are deployed. Fortunately, WordPress makes this super-easy. Just go to Dashboard > Updates and you can update with one click. I basically get a free update any time I get it in my head to write something.

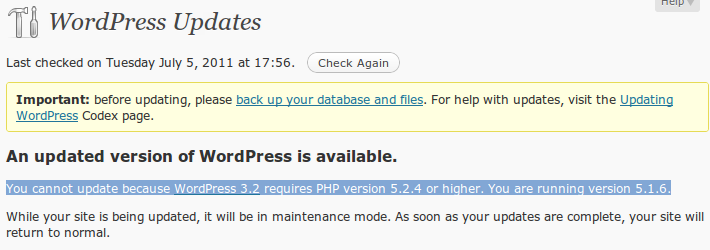

Except this morning, when I was told that an update was available, but:

On my CentOS VM, this was addressed by:

sudo yum update

sudo service httpd restart

Actually, it was a little more involved than that, because we’re replacing the php packages with php53:

0-13:11 djh@www0 ~$ cat /etc/redhat-release

CentOS release 5.6 (Final)

0-13:11 djh@www0 ~$ rpm -q php

php-5.1.6-27.el5_5.3

0-13:11 djh@www0 ~$ yum list installed | grep ^php

php.x86_64 5.1.6-27.el5_5.3 installed

php-cli.x86_64 5.1.6-27.el5_5.3 installed

php-common.x86_64 5.1.6-27.el5_5.3 installed

php-gd.x86_64 5.1.6-27.el5_5.3 installed

php-mysql.x86_64 5.1.6-27.el5_5.3 installed

php-pdo.x86_64 5.1.6-27.el5_5.3 installed

1-13:11 djh@www0 ~$ sudo service httpd stop

Stopping httpd: [ OK ]

0-13:11 djh@www0 ~$ yum list installed | grep ^php | awk '{print $1}'

php.x86_64

php-cli.x86_64

php-common.x86_64

php-gd.x86_64

php-mysql.x86_64

php-pdo.x86_64

0-13:12 djh@www0 ~$ sudo yum remove `!!`

sudo yum remove `yum list installed | grep ^php | awk '{print $1}'`

Loaded plugins: fastestmirror

Setting up Remove Process

Resolving Dependencies

--> Running transaction check

---> Package php.x86_64 0:5.1.6-27.el5_5.3 set to be erased

---> Package php-cli.x86_64 0:5.1.6-27.el5_5.3 set to be erased

---> Package php-common.x86_64 0:5.1.6-27.el5_5.3 set to be erased

---> Package php-gd.x86_64 0:5.1.6-27.el5_5.3 set to be erased

---> Package php-mysql.x86_64 0:5.1.6-27.el5_5.3 set to be erased

---> Package php-pdo.x86_64 0:5.1.6-27.el5_5.3 set to be erased

--> Finished Dependency Resolution

Dependencies Resolved

================================================================================

Package Arch Version Repository Size

================================================================================

Removing:

php x86_64 5.1.6-27.el5_5.3 installed 6.2 M

php-cli x86_64 5.1.6-27.el5_5.3 installed 5.3 M

php-common x86_64 5.1.6-27.el5_5.3 installed 397 k

php-gd x86_64 5.1.6-27.el5_5.3 installed 333 k

php-mysql x86_64 5.1.6-27.el5_5.3 installed 196 k

php-pdo x86_64 5.1.6-27.el5_5.3 installed 114 k

Transaction Summary

================================================================================

Remove 6 Package(s)

Reinstall 0 Package(s)

Downgrade 0 Package(s)

Is this ok [y/N]: y

Downloading Packages:

Running rpm_check_debug

Running Transaction Test

Finished Transaction Test

Transaction Test Succeeded

Running Transaction

Erasing : php-gd 1/6

Erasing : php 2/6

Erasing : php-mysql 3/6

Erasing : php-cli 4/6

Erasing : php-common 5/6

warning: /etc/php.ini saved as /etc/php.ini.rpmsave

Erasing : php-pdo 6/6

Removed:

php.x86_64 0:5.1.6-27.el5_5.3 php-cli.x86_64 0:5.1.6-27.el5_5.3

php-common.x86_64 0:5.1.6-27.el5_5.3 php-gd.x86_64 0:5.1.6-27.el5_5.3

php-mysql.x86_64 0:5.1.6-27.el5_5.3 php-pdo.x86_64 0:5.1.6-27.el5_5.3

Complete!

0-13:13 djh@www0 ~$ sudo yum install php53 php53-mysql php53-gd

Loaded plugins: fastestmirror

Loading mirror speeds from cached hostfile

* base: yum.singlehop.com

* epel: mirror.steadfast.net

* extras: mirror.fdcservers.net

* updates: mirror.sanctuaryhost.com

Setting up Install Process

Resolving Dependencies

--> Running transaction check

---> Package php53.x86_64 0:5.3.3-1.el5_6.1 set to be updated

--> Processing Dependency: php53-cli = 5.3.3-1.el5_6.1 for package: php53

--> Processing Dependency: php53-common = 5.3.3-1.el5_6.1 for package: php53

---> Package php53-gd.x86_64 0:5.3.3-1.el5_6.1 set to be updated

--> Processing Dependency: libXpm.so.4()(64bit) for package: php53-gd

---> Package php53-mysql.x86_64 0:5.3.3-1.el5_6.1 set to be updated

--> Processing Dependency: php53-pdo for package: php53-mysql

--> Running transaction check

---> Package libXpm.x86_64 0:3.5.5-3 set to be updated

---> Package php53-cli.x86_64 0:5.3.3-1.el5_6.1 set to be updated

---> Package php53-common.x86_64 0:5.3.3-1.el5_6.1 set to be updated

---> Package php53-pdo.x86_64 0:5.3.3-1.el5_6.1 set to be updated

--> Finished Dependency Resolution

Dependencies Resolved

================================================================================

Package Arch Version Repository Size

================================================================================

Installing:

php53 x86_64 5.3.3-1.el5_6.1 updates 1.3 M

php53-gd x86_64 5.3.3-1.el5_6.1 updates 109 k

php53-mysql x86_64 5.3.3-1.el5_6.1 updates 92 k

Installing for dependencies:

libXpm x86_64 3.5.5-3 base 44 k

php53-cli x86_64 5.3.3-1.el5_6.1 updates 2.4 M

php53-common x86_64 5.3.3-1.el5_6.1 updates 605 k

php53-pdo x86_64 5.3.3-1.el5_6.1 updates 67 k

Transaction Summary

================================================================================

Install 7 Package(s)

Upgrade 0 Package(s)

Total download size: 4.6 M

Is this ok [y/N]: y

Downloading Packages:

(1/7): libXpm-3.5.5-3.x86_64.rpm | 44 kB 00:00

(2/7): php53-pdo-5.3.3-1.el5_6.1.x86_64.rpm | 67 kB 00:00

(3/7): php53-mysql-5.3.3-1.el5_6.1.x86_64.rpm | 92 kB 00:00

(4/7): php53-gd-5.3.3-1.el5_6.1.x86_64.rpm | 109 kB 00:00

(5/7): php53-common-5.3.3-1.el5_6.1.x86_64.rpm | 605 kB 00:00

(6/7): php53-5.3.3-1.el5_6.1.x86_64.rpm | 1.3 MB 00:00

(7/7): php53-cli-5.3.3-1.el5_6.1.x86_64.rpm | 2.4 MB 00:00

--------------------------------------------------------------------------------

Total 12 MB/s | 4.6 MB 00:00

Running rpm_check_debug

Running Transaction Test

Finished Transaction Test

Transaction Test Succeeded

Running Transaction

Installing : php53-common 1/7

Installing : php53-pdo 2/7

Installing : php53-cli 3/7

Installing : libXpm 4/7

Installing : php53 5/7

Installing : php53-mysql 6/7

Installing : php53-gd 7/7

Installed:

php53.x86_64 0:5.3.3-1.el5_6.1 php53-gd.x86_64 0:5.3.3-1.el5_6.1

php53-mysql.x86_64 0:5.3.3-1.el5_6.1

Dependency Installed:

libXpm.x86_64 0:3.5.5-3 php53-cli.x86_64 0:5.3.3-1.el5_6.1

php53-common.x86_64 0:5.3.3-1.el5_6.1 php53-pdo.x86_64 0:5.3.3-1.el5_6.1

Complete!

0-13:14 djh@www0 ~$ sudo service httpd start

Starting httpd: [ OK ]

And now I have successfully upgraded via the web UI.

Most days, I am not a CentOS admin, so if there is a better way to have done this, I am keen to hear.
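One possible shortcut, as a rough sketch: derive the php53 package names from the installed php packages instead of typing them out. This assumes every php-foo package has a php53-foo counterpart, which holds for the six packages in the transcript above but is not checked for anything else. `php53_names` is a made-up helper name, not part of yum.

```shell
# Hypothetical helper: turn installed php package names into php53 names.
# Reads one package per line (e.g. "php-gd.x86_64"), prints e.g. "php53-gd".
# Assumption: each php-foo package has a php53-foo equivalent.
php53_names() {
    # First strip the ".arch" suffix, then rename the leading "php" to "php53".
    sed -e 's/\.[^.]*$//' -e 's/^php/php53/'
}

# Usage, run BEFORE removing the old packages so the list still exists:
#   yum list installed | grep '^php' | awk '{print $1}' | php53_names
# Review the output, then pass the names to "sudo yum install" by hand.
```

Printing the names rather than feeding them straight to yum leaves room to drop anything that turns out not to exist as a php53 package.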


Link:

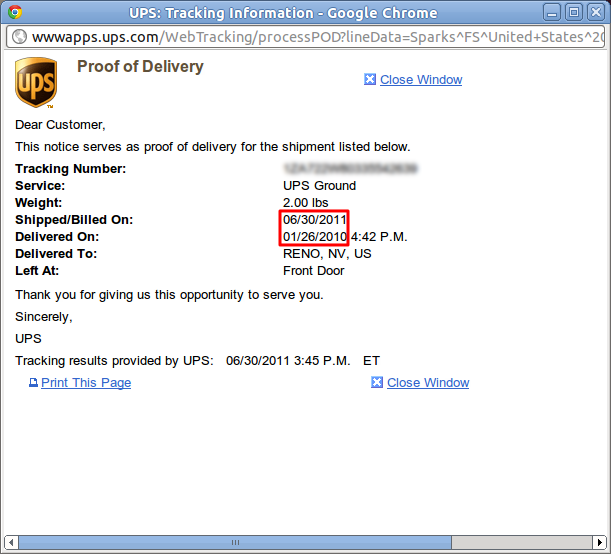

https://dannyman.toldme.com/2011/06/30/ups-tracking-number-rollover/

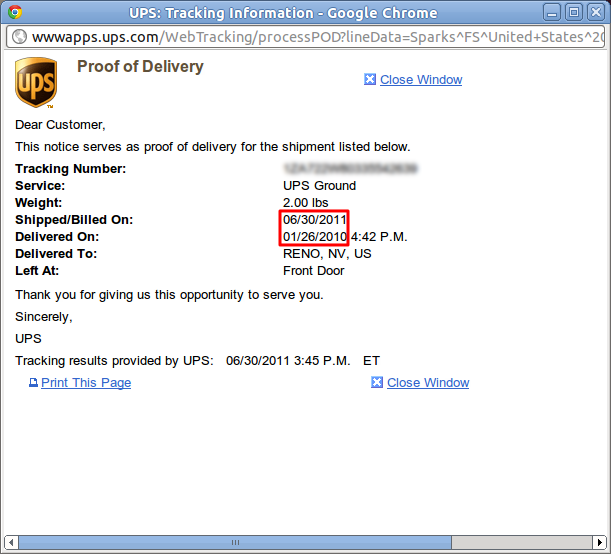

My package was shipped today. It arrived last January.


Link:

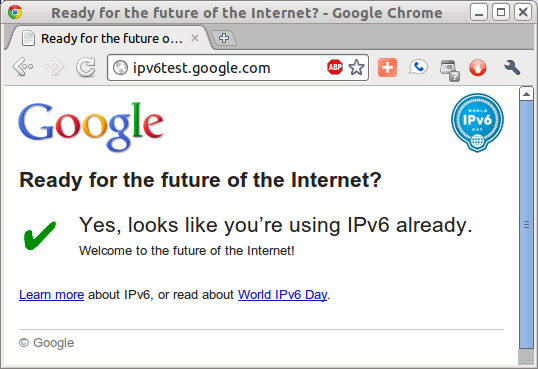

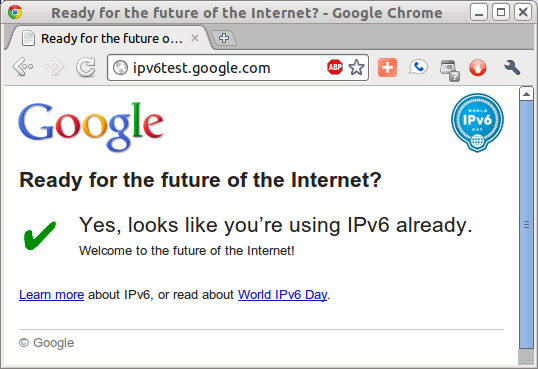

https://dannyman.toldme.com/2011/06/08/can-haz-ipv6/

SysAdmins, check with Tom.

My employer (Cisco) makes IPv6 available internally on a test basis. Once I configured the isatap hostname on my system, all I had to do to get my Ubuntu laptop on IPv6 was:

sudo apt-get install isatapd

I look forward to trying this on a few more systems:

sudo apt-get install isatapd && ping6 -c 1 www.ipv6.cisco.com && figlet -c 'I CAN HAZ IPv6!!'


Link:

https://dannyman.toldme.com/2011/06/02/i-wallace/

Last week I enjoyed a great story by Rands, who, as a team lead, had a total communication disconnect with one of his team members. While he enjoyed an easy rapport with Harold and Stan, he just wasn’t clicking with Wallace. At first he accepted things as they were, but soon learned that the disconnect with Wallace was a genuine problem in need of improvement. Rands concluded that the only thing to do with Wallace was to completely unwind his normal assumptions about rapport and “clicking” with a colleague and just get down to making basic communication work. This can be painstakingly frustrating, but this is what you need to do when you’re not getting the easy, intuitive connection you want with someone you rely on.

One of the comments (Harry) chided Rands: “Here’s the deal: if your boss asks you to lead, he either gives you the power to sack people, or you don’t accept his job offer. In your case, Wallace is obviously not compatible with you. So you sack him.”

I thought “No–Good engineers are expensive, and it is preferable to learn to steer an existing engineer in the right direction rather than finding and training a replacement.” Other comments pointed out that Wallace wasn’t incompetent or incapable, just that he needed clear expectations with management. Someone named Dave chimed in:

“I’ve been a Wallace, and I’ve also been a Harold, and from my standpoint it has less to do with personality than with context. You can have a poor team dynamic, with no clear leadership and constantly-shifting goals, where everybody ends up isolated in their corner and becoming Wallace, at least in part. Or you can have a good team, where even the most Wallace-y engineer becomes Harold for at least a few hours each day.”

Amen. We each have in us both a Wallace and a Harold. They are Yin and Yang. The Wallace side of my personality wants to get heads-down into the work, but needs to know what to work on. The Harold side takes some time to chat up his manager and coworkers to find work and set priorities, then steps aside and lets Wallace get back to work. Tech workers tend to be more innately introverted, so they tend to want a good manager to play the part of Harold, and come back and set clear work objectives and priorities. When things are not going well, unhappy people will tend to revert to their base personalities. For engineers this often means getting stuck in Wallace mode.

Sometimes employees are happy and eager, and sometimes they are curmudgeons. It depends on the context of work and life morale, mediated by an employee’s emotional intelligence. These are variables that can be influenced, allowing for change over time. Management needs to provide a positive work environment with clear goals. Employees need to do our part in building a positive home environment, with positive life aspirations, while also cultivating a greater degree of self awareness. An employee who learns to steer their own craft and deliver what management wants will create a more positive work environment for their colleagues.


Link:

https://dannyman.toldme.com/2011/05/04/data-center-supplies/

As a Systems Administrator, I have spent my share of time in various data centers. So, I was keen to read Ben Rockwood’s Personal Must-Haves. He wants a Leatherman, a particular mug, a particular water bottle, an iPhone and a particular bar code scanner. Honestly, it kind of felt like reading a marketing advertisement. I like the idea of a bar code scanner that can dump ASCII as if it were a keyboard device:

Your laptop will register it as a keyboard, so when you press the button to scan the contents of the barcode are “typed in” where ever you like, which means you can use it with Excel just as easily as my prefered auditing format, CSV’s created in vi.

I explained that I keep my liquids in the break area, so I don’t need any fancy mugs. And I don’t know if an iPhone supports making calls over wifi, which is important because mobile signal is often poor to none in a datacenter, nor have I any idea if the camera is all that handy for quick, low-light macro shots.

My own list would include:

In the Cage

- A proper toolbox

- An inventory of cables in a variety of sizes that match your color scheme.

- Velcro cable ties

- Label maker

- A USB DVD-ROM/CD burner

- Some blank DVDs and CDs

- Some USB memory sticks

- Spare server parts

In the Cage – Networking and Communications

- Wifi access point to a DMZ or sandbox.

- A specific port on an Ethernet switch configured for the guest VLAN, and a long, loudly-colored cable reserved for connecting to it.

- Dynamic DHCP (Seriously: sometimes your NetOps people don’t grok the convenience of DHCP . . .)

- A terrestrial VoIP phone with a very long cable.

In the Cage – Human Sanity

- Noise-cancelling headphones

- Earplugs

- A pocket camera, stashed in the toolbox, which can take good close pictures in low light with minimal shutter lag.

- A power brick for your IT-issue laptop. (Especially if the cage is DC power!)

- A sweater or jacket.

I like wearing the earplugs, then earbuds under noise-cancelling headphones, or over-the-ear earphones. You block out most of the noise and can enjoy some tunes while doing what is often non-thinking physical labor.


Link:

https://dannyman.toldme.com/2011/04/08/c64/

You never forget your first computer.

For Christmas of 1984, Grandpa gave us a

Commodore 64. A couple years later we

got a disk drive, and eventually we even

had a printer. Before the disk drive we

had to buy programs on cartridge, or

type them in to the basic interpreter

line by line. Mostly I just played

cartridge games.

Eventually we got a modem, and I could

talk to BBSes at 300 baud in 40 glorious

columns. (Most BBSes assumed

80-columns.) I was happier when I got a

1200 baud modem for my Amiga, which

could display 80 columns of text.

In my second year of college I

discovered the joy of C programming on

Unix workstations, which led to my

present career as a Unix SysAdmin. I

spend my days juggling multiple windows

of text, generally at least 80x24. /djh

After reading about the brand new Commodore 64, I downloaded a font from style64.org and played around in my style sheet:

**** COMMODORE 64 BASIC V2 ****

64K RAM SYSTEM 38911 BASIC BYTES FREE

READY.

Here is the stylesheet markup:

/* C= 64 */

@font-face {

font-family: "C64_User_Mono";

src:

url("C64_User_Mono_v1.0-STYLE.ttf")

format("truetype");

}

DIV.c64_screen {

background-color: #75a1ec;

color: #4137cd;

min-height: 25ex;

width: 40em;

padding: 3ex 6em;

margin: 0;

}

.c64 {

font-family: "C64_User_Mono",

monospace;

background-color: #4137cd;

color: #75a1ec;

}

The text is wrapped in:

<div class="c64_screen"><pre class="c64">

</pre></div>


Link:

https://dannyman.toldme.com/2011/03/23/flickr-to-smugmug-nah/

I have been concerned that, as Yahoo decays, Flickr may at some point no longer remain a good place to host my photos. I do wish someone would create a competing service which supported the API. Some kid made Zooomr a few years ago, which was to sport a feature-complete Flickr API, but as best I can tell the kid moved to Japan and lost interest in Zooomr, which remains an abandoned stepchild.

Picasa? The desktop client is kind of neat but I don’t much like the web interface. It feels like another one of those one-offs Google bought but then had no idea what to do with it. Anyway, it’s just not my thing.

So, I took a look at SmugMug, who have been trying to lure Flickr refugees, but the consensus seems to be that if you like Flickr, SmugMug can not approximate Flickr. (The biggest concern for me is the loss of the “title” attribute. I’ve got 7,500 images online acquired over a decade . . .)

This is disappointing, because I like SmugMug’s promise of customization, and I have never been afraid to roll my sleeves up to hack on templates, HTML, and CSS to achieve my desires. Perhaps in the next few years SmugMug will become a little more flexible such that it can easily achieve what I want:

- Individual pages for my photos

- Support for a “title” attribute

- An ability to browse title/descriptions (Flickr “detail” view)

Every so often I have this idea that the WordPress Gallery feature should take some steroids and create a friendly, Flickr-API-compatible hosting environment, which you could then customize just as much as you can customize a self-hosted WordPress blog . . . but that is very far beyond my code abilities and free time.


Link:

https://dannyman.toldme.com/2011/03/15/amazon-com-mp3-downloader-ubuntu/

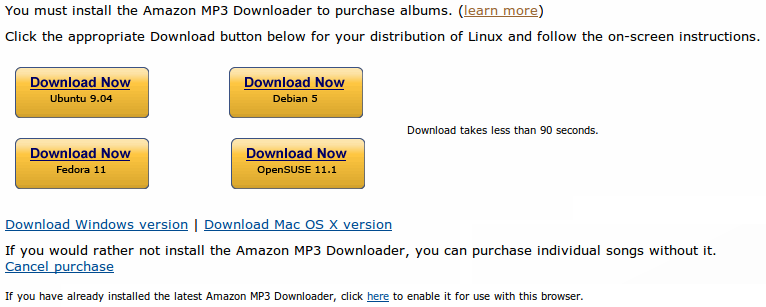

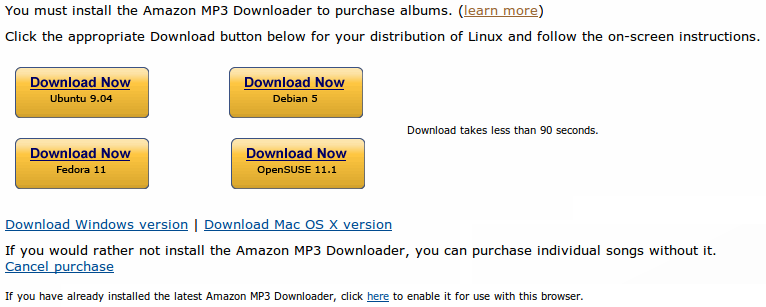

I’ll give Amazon.com credit for making their mp3 downloader available to Linux users:

I clicked on Ubuntu, and Chrome downloaded amazonmp3.deb and the Ubuntu Software Center fired up and told me:

Wrong architecture ‘i386’

That was frustrating. I sent a note to Amazon.com thanking them for their Linux support and asking them to please consider rolling some x86_64 packages. Then I asked Google for advice, and got this fine post:

http://www.ensode.net/roller/dheffelfinger/entry/installing_amazon_mp3_downloader_under

Works fine except the download link to getlibs is old and broken, so I figured I can recapitulate the recipe here:

1) Download amazonmp3.deb and then install it manually:

sudo dpkg -i --force-architecture Downloads/amazonmp3.deb

2) Download and install getlibs: (Thanks for the corrected link, bobxnc!)

http://frozenfox.freehostia.com/cappy/getlibs-all.deb

(Your browser should hand the package off to an installer, else you’ll just do something like sudo dpkg -i Downloads/getlibs-all.deb.)

3) Run getlibs!

Looks something like this:

0-13:08 ~$ sudo getlibs /usr/bin/amazonmp3

libglademm-2.4.so.1: libglademm-2.4-1c2a

libgtkmm-2.4.so.1: libgtkmm-2.4-1c2a

libgiomm-2.4.so.1: libglibmm-2.4-1c2a

libgdkmm-2.4.so.1: libgtkmm-2.4-1c2a

libatkmm-1.6.so.1: libgtkmm-2.4-1c2a

libpangomm-1.4.so.1: libpangomm-1.4-1

libcairomm-1.0.so.1: libcairomm-1.0-1

libglibmm-2.4.so.1: libglibmm-2.4-1c2a

No match for libboost_filesystem-gcc42-1_34_1.so.1.34.1

No match for libboost_regex-gcc42-1_34_1.so.1.34.1

No match for libboost_date_time-gcc42-1_34_1.so.1.34.1

No match for libboost_signals-gcc42-1_34_1.so.1.34.1

No match for libboost_iostreams-gcc42-1_34_1.so.1.34.1

No match for libboost_thread-gcc42-mt-1_34_1.so.1.34.1

The following i386 packages will be installed:

libcairomm-1.0-1

libglademm-2.4-1c2a

libglibmm-2.4-1c2a

libgtkmm-2.4-1c2a

libpangomm-1.4-1

Continue [Y/n]?

Downloading ...

Installing libraries ...

3.1) If, like me, you got “no match for libboost” as above, or you get “amazonmp3: error while loading shared libraries: libboost_filesystem-gcc42-1_34_1.so.1.34.1: cannot open shared object file: No such file or directory” then do this bit:

sudo getlibs -w http://ftp.osuosl.org/pub/ubuntu/pool/universe/b/boost/libboost-date-time1.34.1_1.34.1-16ubuntu1_i386.deb

sudo getlibs -w http://ftp.osuosl.org/pub/ubuntu/pool/universe/b/boost/libboost-filesystem1.34.1_1.34.1-16ubuntu1_i386.deb

sudo getlibs -w http://ftp.osuosl.org/pub/ubuntu/pool/universe/b/boost/libboost-iostreams1.34.1_1.34.1-16ubuntu1_i386.deb

sudo getlibs -w http://ftp.osuosl.org/pub/ubuntu/pool/universe/b/boost/libboost-regex1.34.1_1.34.1-16ubuntu1_i386.deb

sudo getlibs -w http://ftp.osuosl.org/pub/ubuntu/pool/universe/b/boost/libboost-signals1.34.1_1.34.1-16ubuntu1_i386.deb

sudo getlibs -w http://ftp.osuosl.org/pub/ubuntu/pool/universe/b/boost/libboost-thread1.34.1_1.34.1-16ubuntu1_i386.deb

sudo getlibs -w http://ftp.osuosl.org/pub/ubuntu/pool/main/i/icu/libicu40_4.0.1-2ubuntu2_i386.deb

sudo ldconfig

4) Let the music play!

. . .

Q: What is getlibs?

A: “Copyright: 2007; Downloads 32-bit libraries on 32-bit and 64-bit systems. For use with Debian and Ubuntu.”
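The seven `getlibs -w` downloads in step 3.1 differ only in the library name, so they can be sketched as a loop. The mirror, version strings, and package names below are copied from the commands above; the function names are made up for this sketch. It only prints the commands, so they can be reviewed before being pasted into a terminal.

```shell
# Mirror and version are taken from the step 3.1 commands above.
POOL=http://ftp.osuosl.org/pub/ubuntu/pool
BOOST_VER=1.34.1

# Hypothetical helper: build the .deb URL for one boost component.
# $1 is a component name such as "regex" or "date-time".
boost_deb_url() {
    printf '%s/universe/b/boost/libboost-%s%s_%s-16ubuntu1_i386.deb\n' \
        "$POOL" "$1" "$BOOST_VER" "$BOOST_VER"
}

# Print (do not run) the same commands as step 3.1.
print_getlibs_commands() {
    for lib in date-time filesystem iostreams regex signals thread; do
        echo "sudo getlibs -w $(boost_deb_url "$lib")"
    done
    echo "sudo getlibs -w $POOL/main/i/icu/libicu40_4.0.1-2ubuntu2_i386.deb"
    echo "sudo ldconfig"
}
```

Calling `print_getlibs_commands` writes the eight lines to the terminal. If the mirror has since moved these packages, only `POOL` needs changing.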


Link:

https://dannyman.toldme.com/2011/03/12/actiontec-tivo-wpa-wireless-error-n02/

So, I recently replaced my home router with an Actiontec GT724WGR. But I neglected to tell the TiVo, so it quietly started to lose programming data. Then I tried getting the TiVo to talk to the new router, but it wasn’t connecting. Long story short, it appears the TiVo is not supporting WPA2, so on the Actiontec, I went in to Wireless Setup > Advanced Settings and kicked it down to WPA and now the TiVo is updating its information successfully.

But it took some doing to figure that out. It would be helpful if the TiVo N02 error page touched upon the limitations of TiVo’s support for wireless security.


Link:

https://dannyman.toldme.com/2011/02/04/new-perspective/

That “Sea of Glass” building from a different perspective.

While clicking around in a WordPress install last night I discovered that under Appearance > Media I can change the size of images posted through WordPress.


Link:

https://dannyman.toldme.com/2011/01/20/g2-vs-g1/

In a nutshell . . .

The G2 is fast as heck. It has all the cool new Android apps, and T-Mobile lets you do tethering out of the box. We moved our apartment last month and setting up a wireless access point on my phone was braindead easy and plenty fast while we waited for the DSL installation. Everything works faster, and the battery life is better to boot.

The keyboard has a generally nice feel to it. But . . .

The biggest drawback is the lack of a number row on the keyboard. Really irritating to have to press ALT to type numbers. Entering “special” characters is a bitch-and-a-half. For example, to type a < you have to type ALT-ALT-long-press-j. WTF? Also, I miss the scroll wheel. There is a button on the phone that sometimes-but-not-always works as a directional pad to surf through a text field but I have learned to stab my thumb at the screen until I manage to land the cursor where I want it. (What I really miss is the Sidekick 2 direction pad.)

It is a very very nice phone with a short list of dumb shortcomings.


Link:

https://dannyman.toldme.com/2011/01/05/how-i-automate/

ACM has a nice article on “soft skills” for Systems Administrators. I’m digging on their advice for automation:

Automation saves time both by getting tasks done more quickly and by ensuring consistency, thus reducing support calls.

Start with a script that outputs the commands that would do the task. The SA can review the commands for correctness, edit them for special cases, and then paste them to the command line. Writing such scripts is usually easier than automating the entire process and can be a stepping stone to further automation of the process.

A simple script that assists with the common case may be more valuable than a large system that automates every possible aspect of a task. Automate the 80 percent that is easy and save the special cases for the next version. Document which cases require manual handling, and what needs to be done.
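As a minimal sketch of the "script that outputs the commands" idea, here is a made-up example: restarting a web server on several hosts. The task, the host names, and the function name are all assumptions chosen for illustration; nothing is executed, only printed.

```shell
# Hypothetical task: restart httpd on each host given as an argument.
# The function only prints the commands that WOULD do the task, so the
# SA can review them, edit special cases, and paste them into a shell.
plan_restart() {
    for host in "$@"; do
        printf 'ssh %s sudo service httpd restart\n' "$host"
    done
}

# Usage:
#   plan_restart www0 www1 > plan.sh   # generate
#   vi plan.sh                         # review, edit special cases
#   sh plan.sh                         # run once it looks right
```

Once the printed commands have been right a few times in a row, piping them to `sh` directly is the next small step toward fuller automation.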

There have been times in my career when I have felt that people look at automation as a one-off task. “Write a script to automate this task.” Other times I have been asked how I go about automating things, and my answer is that automation isn’t a task so much as an iterative process:

- I try to do the task at least once, maybe a few times.

- Along the way I document what I had to do to get the job done.

- From there, I follow the documentation, and refine edge cases as I go.

- After that I’ll write a script, and get it working. (do)

- I revise the documentation to explain how to use the script. (document)

- And then, I use the script to complete requests, fixing the script when it fails. (refine)

Often enough I have been called upon to help another group automate something. That is a little trickier because I may never get the chance to do the task. Hopefully the other group has written some documentation, otherwise I’ll have to tease it out of them. The whole refinement process is the most obviously collaborative. I’ll document “use the script . . . it should do this . . . if it does something else, send me details.”

There is also the question of what-is-worth-automating. I believe it is the “Practice of System and Network Administration” which breaks tasks in to four buckets: frequent-easy, frequent-difficult, infrequent-easy, infrequent-difficult. You get the most payoff by focusing your automation on the frequent tasks. Easier tasks are generally easier to automate, so go ahead and start there, then turn your focus on the frequent-yet-difficult tasks. If you regard automation as an iterative process, then infrequent tasks are that much harder to automate. This is doubly true when the task is sufficiently infrequent that the systems have a chance to evolve between task execution. Infrequent tasks tend to be adequately served by well-maintained documentation in lieu of an automated process.

A last note for infrequent tasks. Part of the difficulty for these can be a combination of remembering to do them, and finding the correct documentation. One approach to “automating” an infrequent task would be to write a script that files a request to complete the task. This request should of course include a pointer to the documentation. For example, I have a cron job which sends me an e-mail to complete a monthly off-site backup for my personal web site. The e-mail contains the list of commands I need to run. (And yes, the daily local database backups are executed automatically.)
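A reminder of that sort could be sketched as below. The post does not list the real backup commands, so the rsync line, the host name, the paths, the script location, and the mail address are all placeholders.

```shell
# Hypothetical reminder body. The rsync command, host name, and paths are
# placeholders; the real commands are not given in the post.
backup_reminder() {
    cat <<'EOF'
Monthly off-site backup is due. Commands to run:

  rsync -a /var/backups/ offsite.example.com:backups/www/

Documentation: ~/docs/offsite-backup.txt
EOF
}

# Example crontab entry (09:00 on the 1st of each month), assuming the
# function above is saved as an executable script that calls it:
#   0 9 1 * * $HOME/bin/backup-reminder | mail -s "Monthly off-site backup" you@example.com
```

Keeping the commands and the documentation pointer in the message body means the e-mail itself is the checklist.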


Link:

https://dannyman.toldme.com/2010/12/30/skype-failure-as-economic-parable/

Several leading institutions fail, leading consumers to a rush on the remaining institutions, causing them to fail. A cascade of failures brings the whole system crashing down until the central authority undertakes a massive, unprecedented intervention to bring the system back to normal. At first, the degree of central intervention required is underestimated, but in time sufficient resources are brought to bear and the complex system recovers.

In the space of twenty-four hours.

Interestingly, Skype’s network is actually a peer-to-peer network. It is a complex system which normally proves highly resilient, with in-built safety mechanisms to contain failure and ensure reliability. But under the right circumstances, failure can cascade. I couldn’t help but read that as a metaphor for free-market economics, which can usually take care of itself, but will enter a fugue state often enough to require a strong authority to intervene and put it right.

As a SysAdmin, the Skype network sounds like a very interesting beast. I figure that an action item against a future failure might be to provide a “central reserve bank” that monitors the health of so-called supernodes and automatically fires up large numbers of the dedicated mega-supernodes in the event of a widespread failure. (And such a strategy could well exacerbate some other unanticipated failure mode.)


Link:

https://dannyman.toldme.com/2010/12/23/swa-yahoo-being-evil/

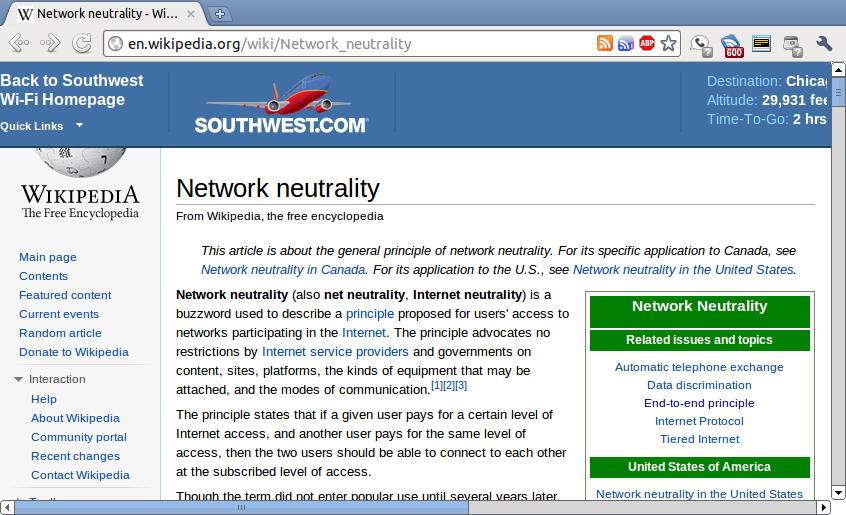

Good news! Southwest Airlines offers wifi on my flight! Only $5 introductory price! I have to try this out!

The service is “designed by Yahoo!”

It is kind of really really slow to make connections.

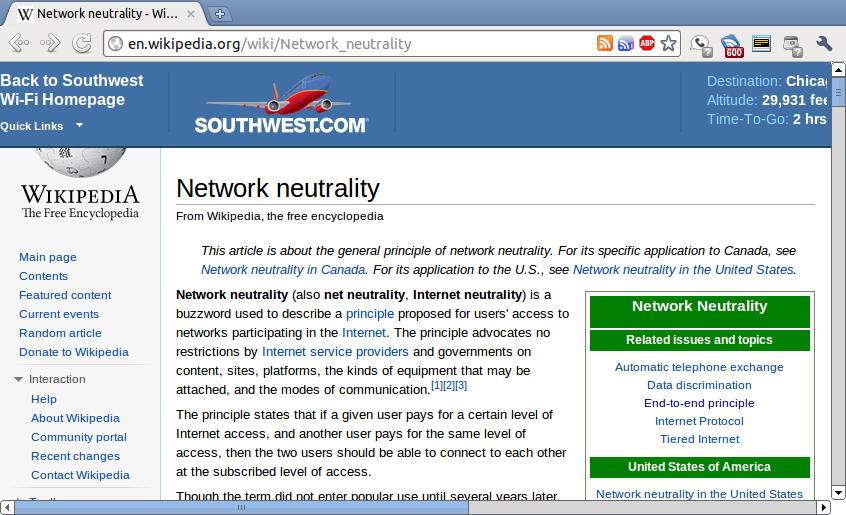

Wait . . . WTF is this?!!

Yup. Southwest Airlines wifi does HTTP session hijacking to inject content in to your web pages.

This is a perfect illustration of the need for net neutrality: your Internet Service Provider should not interfere with your ability to surf web pages. This would be comparable to your phone company interrupting your telephone calls with commercials. Outrageous! Wrong! Bad!!

(On Mei’s computer there are actual ads in the blue bar on top, but my AdBlock plugin filters those.)

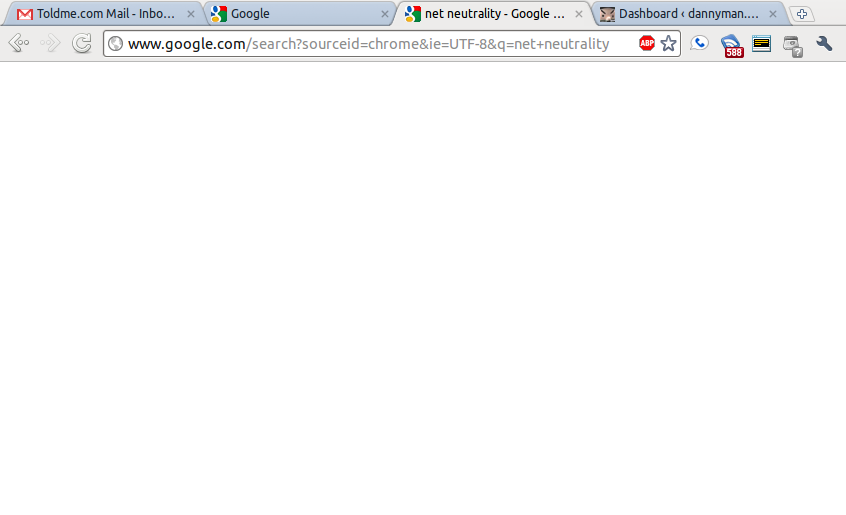

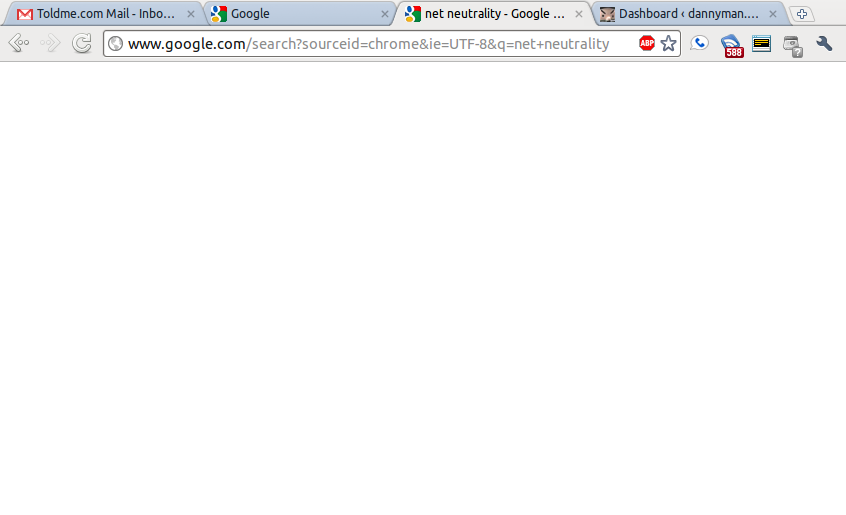

It gets worse from there. On the “designed by Yahoo!” experience you can surf on over to Yahoo! just fine. But I’m a Google man. Here’s what Google looks like:

Work-around #1: On sites that support them, use HTTPS URLs. Those are encrypted, so they can’t be hijacked. So, where http://www.google.com/ fails, https://www.google.com/ gets through!

But my little WordPress blog lacks fancy-pants HTTPS. And the session hijacking breaks my ability to post.

Work-around #2: If you have a remote shell account, a simple ssh -D 8080 will set up a SOCKS proxy, and you can tell your web browser to use SOCKS proxy localhost:8080 . . . now you are routing through an encrypted connection: no hijacking!
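Spelled out, the proxy command needs a host to connect to. A small sketch, where `user@shell.example.com` stands in for whatever remote shell account you have; `-D` opens the local SOCKS port and `-N` tells ssh not to run a remote command. The helper name is made up.

```shell
# Print the ssh command for a local SOCKS proxy.
# $1: remote shell account (placeholder, e.g. user@shell.example.com)
# $2: local port, defaulting to the 8080 used above
socks_proxy_cmd() {
    printf 'ssh -N -D %s %s\n' "${2:-8080}" "$1"
}

# Usage:
#   socks_proxy_cmd user@shell.example.com
# prints the command to run in a spare terminal. Then point the browser
# at SOCKS proxy localhost:8080 and leave the ssh session open.
```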

Update: the charge is $5/segment, so $10 if your plane stops in Las Vegas, and you get to type your credit card number a second time. Though, on the second segment, Google loads okay, but I still had to route through the proxy because the magic header was blocking WordPress’ media interface.

Update: holy packet loss, Batman!

0-20:20 dannhowa@dannhowa-w510 ~$ ping -qc 10 www.yahoo.com

PING any-fp.wa1.b.yahoo.com (72.30.2.43) 56(84) bytes of data.

--- any-fp.wa1.b.yahoo.com ping statistics ---

10 packets transmitted, 8 received, 20% packet loss, time 10011ms

rtt min/avg/max/mdev = 805.813/1669.936/3452.445/936.527 ms, pipe 4

0-20:21 dannhowa@dannhowa-w510 ~$ ping -qc 10 www.google.com

PING www.l.google.com (74.125.19.99) 56(84) bytes of data.

--- www.l.google.com ping statistics ---

10 packets transmitted, 6 received, 40% packet loss, time 11460ms

rtt min/avg/max/mdev = 661.391/2203.774/4022.638/1383.736 ms, pipe 5

At least they aren’t discriminating at the packet level.

Update: it sucked less later on, but still incredible latency:

0-21:07 dannhowa@dannhowa-w510 ~$ ping -qc 10 www.yahoo.com && ping -qc 10 www.google.com

PING any-fp.wa1.b.yahoo.com (98.137.149.56) 56(84) bytes of data.

--- any-fp.wa1.b.yahoo.com ping statistics ---

10 packets transmitted, 8 received, 20% packet loss, time 8998ms

rtt min/avg/max/mdev = 699.470/1023.412/2003.447/481.359 ms, pipe 3

PING www.l.google.com (74.125.19.147) 56(84) bytes of data.

--- www.l.google.com ping statistics ---

10 packets transmitted, 8 received, 20% packet loss, time 9003ms

rtt min/avg/max/mdev = 690.500/1201.541/2052.341/483.891 ms, pipe 3
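For comparing runs like these side by side, the loss figure can be pulled out of the summary line. A rough sketch, assuming the Linux ping summary format shown above; `packet_loss` is a made-up helper name.

```shell
# Extract the packet-loss percentage from ping's summary output.
# Assumes the "N% packet loss" wording printed by the ping shown above.
packet_loss() {
    sed -n 's/.*, \([0-9.]*\)% packet loss.*/\1/p'
}

# Usage:
#   ping -qc 10 www.yahoo.com | packet_loss
```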

The Gogo Wireless on Virgin America always worked way better than this, and Google covers the cost over the holidays. And as far as I know: no session hijacking!


Link:

https://dannyman.toldme.com/2010/12/02/virtualization-blessing-or-curse/

I saw this float across my Google Reader yesterday, thanks to Tom Limoncelli. If you are a sysadmin in an environment fixing to do more virtualization, it is well worth a skim:

Virtualization: Blessing or Curse?

NOTE: this isn’t an anti-virtualization rant, more of a “things to watch out for” briefing.

Some of my take-aways:

- Sure we’ll have fewer physical servers, but the number of deployed systems will grow more quickly. As that grows so too will our systems management burden.

- As the system count grows faster, we may hit capacity chokepoints on internal infrastructure like monitoring, trending, log analysis, DHCP or DNS faster than previously assumed.

- Troubleshooting becomes more complex: the question of whether your slow disk access is an application, OS, or hardware issue now has to consider the host OS, networking/SAN, or filer as well.

- Regarding troubleshooting: we may add another team to the mix (to manage virtualization) while troubleshooting has an increased probability of requiring cooperation across multiple teams to pin down. That puts increased importance on our ability to cooperate across teams.

- Change management impacts: small changes against a larger number of systems sharing architecture snowball even more. One can add something to the base image that increases disk use by 1% for any one system, but multiply that across all your systems and you have a big new load on your filer. (1,000 butterflies flapping their wings.)

- Reduced fault isolation: as we have greater ability to inadvertently magnify increased load and swamp network and storage infrastructure, we have a greater ability to impact the performance of unrelated systems which share that infrastructure.

- The article also cautions against relying on vendor-provided GUIs because they don’t scale as well as a good management and automation framework.
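To put rough numbers on the 1% example from the change management point above, here is a back-of-the-envelope sketch. The VM count and image size are invented for illustration and do not come from the article.

```shell
# All inputs are made-up illustration values.
# $1: number of virtual machines
# $2: base image size per VM, in GB
# $3: growth of the base image, in percent
extra_filer_gb() {
    echo $(( $1 * $2 * $3 / 100 ))
}

# 1,000 VMs x 20 GB each x 1% growth:
extra_filer_gb 1000 20 1   # prints 200
```

So a change that costs 0.2 GB on any one system would land as 200 GB of new load on the shared filer under these assumptions.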

Ah, the other noteworthy thing there is that ACM Queue magazine is now including articles on systems administration. (I subscribed to the system administration feed.)
