Latency Goggles for Linux

While diagnosing why an internal web site is slow for some users, I learned that our overseas colleagues see a ping latency of around 200 ms to the site. That is not unreasonable for an overseas link. But pages that pull in many additional objects force remote clients to open several connections and make many round trips before the page is loaded and rendered, and every round trip pays the full latency. What works fine at 20 ms can really drag at 200 ms.
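As a rough model (my assumption: it ignores bandwidth, server processing time and parallel fetching), a page whose loading requires R round trips one after another spends about R times the round-trip time (RTT) just waiting. With a hypothetical R = 20:

$$
T_{\text{wait}} \approx R \cdot \text{RTT}: \qquad 20 \times 20\ \text{ms} = 0.4\ \text{s}, \qquad 20 \times 200\ \text{ms} = 4\ \text{s}
$$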

How to test this out? As a Linux user, I can use netem, configured through the tc command, to add latency on my own network interface:

```
# ping -qc 2 google.com
PING google.com (74.125.224.145) 56(84) bytes of data.

--- google.com ping statistics ---
2 packets transmitted, 2 received, 0% packet loss, time 5009ms
rtt min/avg/max/mdev = 4.136/4.197/4.258/0.061 ms
# tc qdisc add dev wlan0 root netem delay 200ms
# ping -qc 2 google.com
PING google.com (74.125.224.144) 56(84) bytes of data.

--- google.com ping statistics ---
2 packets transmitted, 2 received, 0% packet loss, time 5474ms
rtt min/avg/max/mdev = 205.006/205.034/205.062/0.028 ms
# tc qdisc change dev wlan0 root netem delay 0ms
# ping -qc 2 google.com
PING google.com (74.125.224.50) 56(84) bytes of data.

--- google.com ping statistics ---
2 packets transmitted, 2 received, 0% packet loss, time 5011ms
rtt min/avg/max/mdev = 4.117/4.182/4.248/0.092 ms
```

Note: I’m on wireless, so I’m tuning wlan0. You’ll want eth0, or whatever interface is appropriate for your configuration.
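Instead of hard-coding the interface, it can be looked up from the default route. The helpers below are a minimal sketch: the function names (route_dev, default_dev, set_delay, clear_delay) and the DRY_RUN switch are hypothetical conventions of mine, while `tc qdisc replace` (add the qdisc or change it if present) and `tc qdisc del` are standard tc subcommands. Deleting the root qdisc, rather than setting the delay to 0ms, removes netem from the interface entirely.

```bash
#!/usr/bin/env bash
# Minimal netem helpers (a sketch; real runs need iproute2 and root).
# Set DRY_RUN=1 to print the tc commands instead of executing them.

# Print the interface after "dev" in a route line, e.g.
# "default via 192.168.1.1 dev wlan0 proto dhcp metric 600" -> wlan0
route_dev() {
  awk '{ for (i = 1; i < NF; i++) if ($i == "dev") { print $(i + 1); exit } }'
}

# Interface used by the default route (the first one, if there are several).
default_dev() {
  ip route show default | route_dev
}

# Execute a command, or just echo it when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then
    echo "$*"
  else
    "$@"
  fi
}

# "replace" installs the netem qdisc if none is there, or updates it if it is.
set_delay() {    # usage: set_delay DEV DELAY, e.g. set_delay wlan0 200ms
  run tc qdisc replace dev "$1" root netem delay "$2"
}

# Remove the root qdisc so the interface returns to its default queueing.
clear_delay() {  # usage: clear_delay DEV
  run tc qdisc del dev "$1" root
}
```

In a root shell, after sourcing the file, `set_delay "$(default_dev)" 200ms` adds the latency and `clear_delay "$(default_dev)"` takes it away again.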

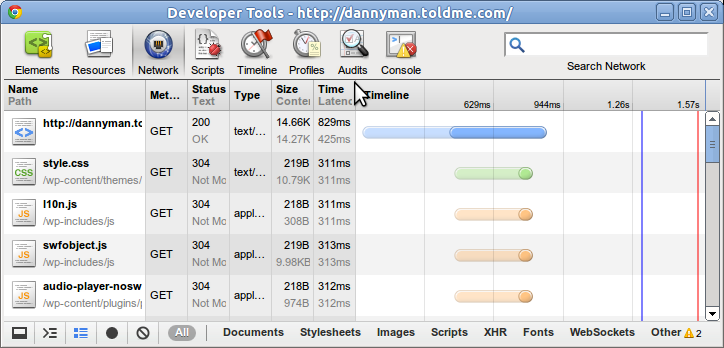

The YSlow plugin or the Google Chrome Developer tool Network tab can be helpful to see what is going on:

With my own web site, adding 200 ms of latency doubles total page load time, from 0.8 seconds to 1.6 seconds.

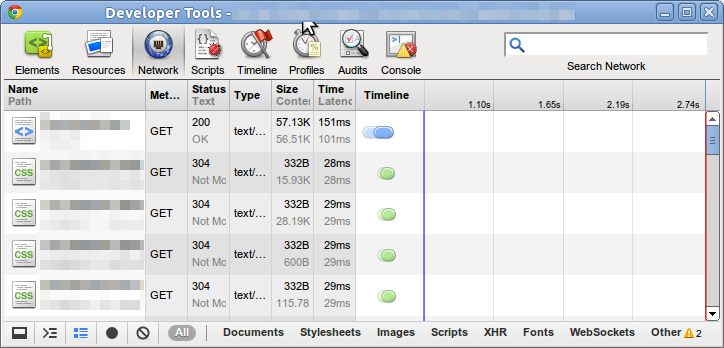

Here’s what I see when I visit the problem web site:

Total page load time is 7.5 seconds, nearly three times as long as without the added latency.
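Turning the same rough reasoning around gives a ballpark for how many sequential round trips sit on each page's critical path. This assumes (my assumption, not a measurement) that all of the extra load time comes from round trips that each pay the full added 200 ms. For the problem site, "nearly three times" puts the baseline at a little over 7.5 / 3 = 2.5 s, so its estimate is at most about 25:

$$
R \approx \frac{T_{\text{delayed}} - T_{\text{baseline}}}{200\ \text{ms}}: \qquad \frac{1.6 - 0.8}{0.2} = 4\ \text{(my site)}, \qquad \frac{7.5 - 2.5}{0.2} = 25\ \text{(problem site)}
$$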

A very crude way to measure this is with wget on the command line.

The wget man page describes the -p (page requisites) option and suggests wget -E -H -k -K -p <URL> for downloading a single page along with everything needed to display it. (You’ll need to RTFM yourself for what each option does…) So I do:

```
$ cd /tmp
$ sudo tc qdisc change dev wlan0 root netem delay 0ms
$ time wget -q -E -H -k -K -p http://google.com

real    0m0.160s
user    0m0.010s
sys     0m0.000s
$ sudo tc qdisc change dev wlan0 root netem delay 200ms
$ time wget -q -E -H -k -K -p http://google.com

real    0m3.832s
user    0m0.010s
sys     0m0.000s
```
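Repeating that by hand for several delays gets tedious. The loop below is a sketch of the same measurement over a list of delays, under these assumptions: it runs as root, the DEV and URL defaults are examples only, each run downloads into a fresh mktemp directory via wget's -P (--directory-prefix) option, timing uses GNU date's %N, and it uses `tc qdisc replace` so no qdisc has to exist beforehand. The TC and WGET variables are a hypothetical hook for dry-running the loop (for example TC=true).

```bash
#!/usr/bin/env bash
# Sketch: time wget's page-requisites fetch at several netem delays.
# Assumptions: run as root; DEV and URL defaults are examples only.
# TC and WGET can be overridden (e.g. TC=true) to dry-run the loop.
DEV=${DEV:-wlan0}
URL=${URL:-http://google.com}
TC=${TC:-tc}
WGET=${WGET:-wget}

sweep() {   # usage: sweep DELAY... (e.g. sweep 0ms 100ms 200ms)
  local delay dir start end
  for delay in "$@"; do
    "$TC" qdisc replace dev "$DEV" root netem delay "$delay"
    dir=$(mktemp -d)          # fresh download directory for every run
    start=$(date +%s%N)       # GNU date: nanoseconds since the epoch
    "$WGET" -q -E -H -k -K -p -P "$dir" "$URL"
    end=$(date +%s%N)
    rm -rf "$dir"
    echo "$delay $(( (end - start) / 1000000 )) ms"
  done
  "$TC" qdisc del dev "$DEV" root   # take the artificial delay away again
}

# Only run the sweep when delays are given on the command line.
if [ "$#" -gt 0 ]; then
  sweep "$@"
fi
```

Saved as a hypothetical sweep.sh, it could be run as `sudo env DEV=wlan0 ./sweep.sh 0ms 100ms 200ms`, printing one "delay elapsed-ms" line per run.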

Of course, even with all those options, wget behaves very differently from a modern GUI web browser: there’s no caching, it doesn’t parse the DOM, and it blindly downloads requisites that aren’t actually needed (even a large font file referenced in a CSS comment). It also makes all its requests serially, whereas a modern browser fetches several objects in parallel. And no browser running over a connection with induced latency will replicate the experience of remote users who pull some page requisites from zippy CDN servers close to them, since netem delays every request equally.
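To see why serial fetching hurts so much more at high latency, here is a back-of-the-envelope sketch. It assumes (a simplification of mine) that each request costs exactly one round trip and that up to CONNS requests are in flight at once, so a page needs ceil(OBJECTS / CONNS) rounds; the 40-object page is hypothetical.

```bash
#!/usr/bin/env bash
# Back-of-the-envelope model (an assumption, not a measurement): each request
# costs one round trip, and up to CONNS requests are in flight at a time, so
# the page needs ceil(OBJECTS / CONNS) rounds of requests.
fetch_wait_ms() {   # usage: fetch_wait_ms OBJECTS CONNS RTT_MS
  local objects=$1 conns=$2 rtt_ms=$3
  local rounds=$(( (objects + conns - 1) / conns ))   # integer ceiling
  echo $(( rounds * rtt_ms ))
}

# A hypothetical page with 40 requisites over a 200 ms round trip:
echo "serial, like wget:         $(fetch_wait_ms 40 1 200) ms"   # 8000 ms
echo "6 connections in parallel: $(fetch_wait_ms 40 6 200) ms"   # 1400 ms
```

The same model suggests why more concurrent connections help remote users: every extra connection removes whole rounds, and each round is worth a full 200 ms to them.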

In the end, I offered my remote colleagues the following advice:

- Try tuning the web browser to use more concurrent TCP connections.

- Try modifying browser behavior: middle-click faster-loading sub-pages into new tabs, work in those tabs, then refer back to the slower-loading “dashboard” screen, reloading it only when needed.
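As one way to act on the first suggestion, if the colleagues happen to use Firefox (an assumption; the browser isn't specified), the per-server connection limit is the network.http.max-persistent-connections-per-server preference, which a user.js file in the profile directory can set at startup. The function name and the example value below are illustrative only, and Firefox must be restarted to pick up the change.

```bash
#!/usr/bin/env bash
# Sketch, assuming Firefox: set the per-server connection limit in user.js.
# PROFILE_DIR is a placeholder; about:profiles shows the real path.
set_ff_conns() {   # usage: set_ff_conns PROFILE_DIR N  (hypothetical helper)
  local userjs="$1/user.js"
  touch "$userjs"
  # Drop any earlier line for this pref, then append the new value.
  grep -vF 'network.http.max-persistent-connections-per-server' "$userjs" > "$userjs.tmp" || true
  echo "user_pref(\"network.http.max-persistent-connections-per-server\", $2);" >> "$userjs.tmp"
  mv "$userjs.tmp" "$userjs"
}
```

For example, `set_ff_conns ~/.mozilla/firefox/PROFILE_DIR 10` with Firefox closed; running it again with another value replaces the earlier line rather than adding a second one.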

I also tweaked the web application so it can show a lighter-weight “dashboard” screen with fewer objects hanging off it. This seems to improve load time on that page by about 50%.