Link:

https://dannyman.toldme.com/2016/02/09/tech-tip-self-documenting-config-files/

One of my personal “best practices” is to leave myself and my colleagues hints as to how to get the job done. Plenty of folks may be aware that they need to edit /etc/exports to add a client to an NFS server. I would guess that the filename and convention are decades old, but who among us, even the full-time Unix guy, recalls that you then need to reload the nfs-kernel-server process?

For example:

    0-11:04 djh@fs0 ~$ head -7 /etc/exports
    # /etc/exports: the access control list for filesystems which may be exported
    # to NFS clients. See exports(5).
    #
    # ***** HINT: After you edit this file, do: *****
    # sudo service nfs-kernel-server reload
    # ***** HINT: run the command on the previous line! *****
    #

Feedback Welcome

Link:

https://dannyman.toldme.com/2016/01/02/windows-10/

The other day I figured to browse Best Buy. I spied a 15″ Toshiba laptop, the kind that can pivot the screen 180 degrees into a tablet. With a full sized keyboard. And a 4k screen. And 12GB of RAM. For $1,000. The catch? A non-SSD 1TB hard drive and stock graphics. And … Windows 10.

But it appealed to me because I’ve been thinking I want a computer I can use on the couch. My home workstation is very nice, a desktop with a 4k screen, but it is very much a workstation. Especially because of the 4k screen it is poorly suited to sitting back and browsing … so, I went home, thought on it over dinner, then drove back to the store and bought a toy. (Oh boy! Oh boy!!)

Every few years I flirt with Microsoft stuff, trying to prove that despite the fact I’m a Unix guy I still have an open mind. I usually throw up my hands in exasperation after a few weeks. The only time I ever sort of appreciated Microsoft was around the Windows XP days: it was a pretty decent OS for managing folders full of pictures. A lot nicer than OS X, anyway.

This time, out of the gate, Windows 10 was a dog. The non-SSD hard drive slowed things down a great deal. Once I got up and running though, it isn’t bad. It took a little getting used to the sluggishness — a combination of my adapting to the trackpad mouse thing and I swear that under load the Windows UI is less responsive than what I’m used to. The 4k stuff works reasonably well … a lot of apps are just transparently pixel-doubled, which isn’t always pretty but it beats squinting. I can flip the thing around into a landscape tablet — which is kind of nice, though, given its size, a bit awkward — for reading. I can tap the screen or pinch around to zoom text. The UI, so far, is back to the good old Windows-and-Icons stuff old-timers like me are used to.

Mind you, I haven’t tried anything as nutty as setting up OpenVPN to auto-launch on user login. Trying to make that happen for one of my users at work on Windows 8 left me twitchy for weeks afterward.

Anyway, a little bit of time will tell .. I have until January 15 to make a return. The use case is web browsing, maybe some gaming, and sorting photos which are synced via Dropbox. This will likely do the trick. As a little bonus, McAfee anti-virus is paid for for the first year!

I did try Ubuntu, though. Despite UEFI and all the secure boot crud, Ubuntu 15.10 managed the install like it was nothing, re-sizing the hard drive and all. No driver issues … touchscreen even worked. Nice! Normally, I hate Unity, but it is okay for a casual computing environment. Unlike Windows 10, though, I can’t three-finger-swipe-up to show all the windows. Windows+W will do that but really … and I couldn’t figure out how to get “middle mouse button” working on the track pad. For me, probably 70% of why I like Unix as an interface is the ease of copy-paste.

But things got really dark when I tried KDE and XFCE. Installing either kubuntu-desktop or xubuntu-desktop actually made the computer unusable. The first had a weird package conflict that caused X to just not display at all. I had to boot into safe mode and manually remove the kubuntu dependencies. XFCE was slightly less traumatic: it just broke all the window managers in weird ways until I again figured out how to manually remove the dependencies.

It is just as easy to pull up a Terminal on Windows 10 or Ubuntu … you hit Start and type “term” but Windows 10 doesn’t come with an SSH client, which is all I really ask. From what I can tell, my old friend PuTTY is still the State of the Art. It is like the 1990s never died.

Ah, and out of the gate, Windows 10 allows you multiple desktops. Looks similar to Mac. I haven’t really played with it but it is a heartening sign.

And the Toshiba is nice. If I return it I think I’ll look for something with a matte screen and maybe actual buttons around the track pad so that if I do Unix it up, I can middle-click. Oh, and maybe an SSD and nicer graphics … but you can always upgrade the hard drive after the fact. I prefer matte screens, and being a touch screen means this thing hoovers up fingerprints faster than you can say chamois.

Maybe I’ll try FreeBSD on the Linux partition. See how a very old friend fares on this new toy. :)


Link:

https://dannyman.toldme.com/2015/11/11/variability-of-hard-drive-speed/

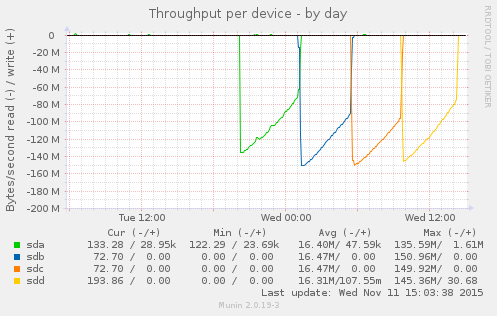

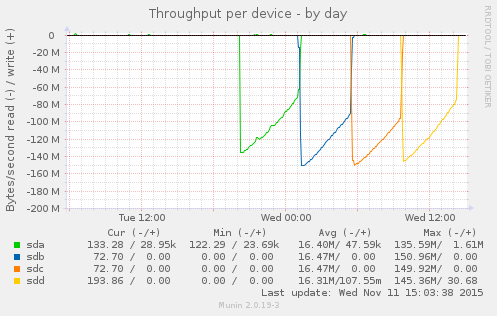

Munin gives me this beautiful graph:

This is the result of:

    $ for s in a b c d; do echo ; echo sd${s} ; sudo dd if=/dev/sd${s} of=/dev/null; done

    sda
    3907029168+0 records in
    3907029168+0 records out
    2000398934016 bytes (2.0 TB) copied, 17940.3 s, 112 MB/s

    sdb
    3907029168+0 records in
    3907029168+0 records out
    2000398934016 bytes (2.0 TB) copied, 15457.9 s, 129 MB/s

    sdc
    3907029168+0 records in
    3907029168+0 records out
    2000398934016 bytes (2.0 TB) copied, 15119.4 s, 132 MB/s

    sdd
    3907029168+0 records in
    3907029168+0 records out
    2000398934016 bytes (2.0 TB) copied, 16689.7 s, 120 MB/s

The back story is that I had a system with two bad disks, which seems a little weird. I replaced the disks and I am trying to kick some tires before I put the system back into service. The loop above says “read each disk in turn in its entirety.” Prior to replacing the disks, a loop like the above would cause the bad disks, sdb and sdc, to abort the read before completing the process.

The disks in this system are 2TB 7200RPM SATA drives. sda and sdd are Western Digital, while sdb and sdc are HGST. This is in no way intended as a benchmark, but what I appreciate is the consistent pattern across the disks: throughput starts high and then gradually drops over time. What is going on? Well, these are magnetic platters spinning at a constant speed. When you read a track on the outer part of the platter, more data passes under the head per revolution than when you read a track closer to the center. Drives conventionally map their lowest block addresses to the outer tracks, so a sequential read from the start of the disk begins fast and ends slow.
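As a rough check on the numbers above, here is a minimal Python sketch that recomputes each disk's average throughput from the byte count and elapsed time dd reported, and then models the slowdown under a simple constant-RPM assumption. The function names and the platter radii are hypothetical, chosen only for illustration. Real drives use zoned recording, so the true curve is stepped rather than perfectly linear.

```python
# Totals copied from the dd output above: (bytes read, elapsed seconds).
DD_RESULTS = {
    "sda": (2000398934016, 17940.3),
    "sdb": (2000398934016, 15457.9),
    "sdc": (2000398934016, 15119.4),
    "sdd": (2000398934016, 16689.7),
}


def average_mb_per_s(total_bytes, seconds):
    """Average throughput in decimal megabytes per second, as dd reports it."""
    return total_bytes / seconds / 1e6


def relative_throughput(radius_mm, outer_radius_mm):
    """Fraction of outer-track throughput available at a given radius.

    Assumes constant rotational speed and constant linear bit density,
    so bytes per revolution scale with track circumference (i.e. radius).
    """
    return radius_mm / outer_radius_mm


if __name__ == "__main__":
    for disk, (total_bytes, seconds) in DD_RESULTS.items():
        rate = average_mb_per_s(total_bytes, seconds)
        print(f"{disk}: {rate:.1f} MB/s average")

    # Hypothetical geometry for a 3.5-inch platter: outermost data track
    # at 46 mm, innermost at 20 mm. These radii are illustrative guesses.
    print(f"inner/outer ratio: {relative_throughput(20, 46):.2f}")
```

The computed averages round to the 112, 129, 132 and 120 MB/s figures that dd printed. An average hides the shape of the curve, which is why the graph is the more interesting view.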

I appreciate the clean visual illustration of this principle. On the compute cluster, I have noticed that we are more likely to hit performance issues when storage capacity gets tight. I had some old knowledge from FreeBSD that at a certain threshold, the OS switches from optimizing disk writes for speed to optimizing them for space. I don’t know if Linux / ext4 operates that way. It is reassuring to understand that, due to the physical properties, traditional hard drives slow down as they fill up.


Link:

https://dannyman.toldme.com/2015/10/29/it-strategy-lose-the-religion/

High Scalability asks “What Ideas in IT Must Die?” My own response . . .

I have been loath to embrace containers, especially since I attended a conference that was supposed to be about DevOps but was 90% about all the various projects around Docker and the like. I worked enough with Jails in the past two decades to feel exasperation at the fervent religious belief in the advantages of reinventing an old wheel.

I attended a presentation about Kubernetes yesterday. Kubernetes is an orchestration tool for containers that sounds like a skin condition, but I try to keep an open mind. “Watch how fast I can re-allocate and scale my compute resources!” Well, I can do that more slowly but conveniently enough with my VM and config management tools . . .

. . . but I do see potential utility in that containers could offer a simpler deployment process for my devs.

There was an undercurrent there that Kubernetes is the Great New Religion that Will Unify All the Things. I used to embrace ideas like that, then I got really turned off by thinking like that, and now I know enough to see through the True Beliefs. I could deploy Kubernetes as an offering of my IT “Service Catalog” as a complementary option alongside the bare metal, Hadoop clusters, VMs, and other services I have to offer. It is not a Winner Take All play, but an option that could improve productivity for some of our application deployment needs.

At the end of the day, as an IT Guy, I need to be a good aggregator, offering my users a range of solutions and helping them adopt more useful tools for their needs. My metrics for success are whether or not my solutions work for my users, whether they further the mission of my enterprise, and whether they are cost-effective, in terms of time and money.


Link:

https://dannyman.toldme.com/2015/06/24/one-on-ones/

Early in my career, I didn’t interact much with management. For the past decade, the companies I have worked at had regular one-on-one meetings with my immediate manager. At the end of my tenure at Cisco, thanks to a growing rapport and adjacent cubicles, I communicated with my manager several times a day, on all manner of topics.

One of the nagging questions I’ve never really asked myself is: what is the point of a one-on-one? I never really looked at it beyond being a thing managers are told to do, a minor tax on my time. At Cisco, I found value in harvesting bits of gossip as to what was going on in the levels of management between me and the CEO.

Ben Horowitz has a good piece on his blog. In his view, the one-on-one is an important end point of an effective communication architecture within the company. The employee should drive the agenda, perhaps to the point of providing a written agenda ahead of time. “This is what is on my mind,” giving management an opportunity to listen, refine strategies, clarify expectations, un-block, and provide insight up the management chain. He suggests some questions to help get introverted employees talking.

I am not a manager, but as an employee, the take-away is the need to conjure an agenda: what is working? What is not working? How can we make not merely the technology, but the way we work as a team and a company, more effective?


Link:

https://dannyman.toldme.com/2015/04/23/virsh-migrate-local-storage-live/

In my previous post I lamented that it seemed I could not migrate a VM between virtual hosts without shared storage. This is incorrect. I have just completed a test run based on an old mailing list message:

    ServerA> virsh -c qemu:///system list
     Id    Name                           State
    ----------------------------------------------------
     5     testvm0                        running

    ServerA> virsh -c qemu+ssh://ServerB list # Test connection to ServerB
     Id    Name                           State
    ----------------------------------------------------

The real trick is to work around a bug whereby the target disk needs to be created. So, on ServerB, I created an empty 8G disk image:

ServerB> sudo dd if=/dev/zero of=/var/lib/libvirt/images/testvm0.img bs=1 count=0 seek=8G

Or, if you’re into backticks and remote pipes:

ServerB> sudo dd if=/dev/zero of=/var/lib/libvirt/images/testvm0.img bs=1 count=0 seek=`ssh ServerA ls -l /var/lib/libvirt/images/testvm0.img | awk '{print $5}'`
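Note that the dd invocation writes no data at all: with count=0 and a seek offset it only extends the file to the requested length, leaving a sparse file. For comparison, here is a minimal Python sketch of the same idea. The helper names parse_size and create_sparse_image are hypothetical, and the suffix table assumes GNU dd's convention that K, M, G and T are powers of 1024.

```python
import os
import tempfile

# Binary suffixes as GNU dd interprets them (powers of 1024).
_SUFFIXES = {"K": 1024, "M": 1024**2, "G": 1024**3, "T": 1024**4}


def parse_size(text):
    """Convert a size such as '8G' or '512' into a byte count."""
    text = text.strip()
    if text and text[-1].upper() in _SUFFIXES:
        return int(text[:-1]) * _SUFFIXES[text[-1].upper()]
    return int(text)


def create_sparse_image(path, size_bytes):
    """Create an empty file of size_bytes without writing any data.

    Any existing contents at path are discarded.
    """
    with open(path, "wb") as image:
        image.truncate(size_bytes)


if __name__ == "__main__":
    # Demonstrate on a small temporary file rather than a real image path.
    with tempfile.TemporaryDirectory() as tmp:
        demo = os.path.join(tmp, "demo.img")
        create_sparse_image(demo, parse_size("16M"))
        print(os.path.getsize(demo), "bytes apparent size")
```

On filesystems that support sparse files, the apparent size is the full length while almost no disk blocks are allocated until the migration fills the image in.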

Now, we can cook with propane:

ServerA> virsh -c qemu:///system migrate --live --copy-storage-all --persistent --undefinesource testvm0 qemu+ssh://ServerB/system

This will take some time. I used virt-manager to attach to testvm0’s console and ran a ping test. At the end of the procedure, virt-manager lost console. I reconnected via ServerB and found that the VM hadn’t missed a ping.

And now:

    ServerA> virsh -c qemu+ssh://ServerB/system list
     Id    Name                           State
    ----------------------------------------------------
     4     testvm0                        running

Admittedly, the need to manually create the target disk is a little more janky than I’d like, but this is definitely a nice proof of concept and another nail in the coffin of NAS being a hard dependency for VM infrastructure. Any day you can kiss expensive, proprietary SPOF dependencies goodbye is a good day.


Link:

https://dannyman.toldme.com/2015/04/22/virt-manager-hell-yeah/

I may have just had a revelation.

I inherited a bunch of ProxMox. It is a rather nice, freemium (nagware) front-end to virtualization in Linux. One of my frustrations is that the local NAS is pretty weak, so we mostly run VMs on local disk. That compounds with another frustration where ProxMox doesn’t let you build local RAID on the VM hosts. That is especially sad because it is based on Debian and at least with Ubuntu, building software RAID at boot is really easy. If only I could easily manage my VMs on Ubuntu . . .

Well, turns out, we just shake the box:

On one or more VM hosts, check if your kernel is ready:

sudo apt-get install cpu-checker

kvm-ok

Then, install KVM and libvirt: (and give your user access..)

sudo apt-get install qemu-kvm libvirt-bin ubuntu-vm-builder bridge-utils

sudo adduser `id -un` libvirtd

Log back in, verify installation:

virsh -c qemu:///system list

And then, on your local Ubuntu workstation: (you are a SysAdmin, right?)

sudo apt-get install virt-manager

Then, upon running virt-manager, you can connect to the remote host(s) via SSH, and, whee! Full console access! So far the only kink I have had to iron is that for guest PXE boot you need to switch Source device to vtap. The system also supports live migration but that looks like it depends on a shared network filesystem. More to explore.

UPDATE: You CAN live migrate between local filesystems!

It will get more interesting to look at how hard it is to migrate VMs from ProxMox to KVM+libvirt.


Link:

https://dannyman.toldme.com/2015/04/08/aws-melt-glaciers-slowly/

I have been working with AWS to automate disaster recovery. Sync data up to S3 buckets (or, sometimes, EBS) and then write Ansible scripts to deploy a bunch of EC2 instances, restore the data, configure the systems.

Restoring data from Glacier is kind of a pain to automate. You have to iterate over the items in a bucket and issue restore requests for each item. But it gets more exciting than that on the billing end: Glacier restores can be crazy expensive!

A few things I learned this week:

1) Amazon Glacier restore fees are based on how quickly you want to restore your data. You can restore up to 5% of your total S3 storage on a given day for free. If you restore more than that they start to charge you and at the end of the month you’re confused by the $,$$$ bill.

2) Amazon Glacier will also charge you money if you delete data that hasn’t been in there for at least three months. If you Glacier something, you will pay to store it for at least three months. So, Glacier your archive data, but for something like a rolling backup, no Glacier.

3) When you get a $,$$$ bill one month because you were naive, file a support request and they can get you some money refunded.
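The per-object iteration described above might be sketched in Python with boto3 roughly as follows. This is an illustration under stated assumptions, not the script from this project: the function name, the bucket name, the seven-day retention and the choice of the Bulk retrieval tier are all hypothetical, and the tier option belongs to the current S3 API, so it may not match what was available when this was written.

```python
def request_glacier_restores(s3_client, bucket, days=7, tier="Bulk"):
    """Issue a restore request for every GLACIER-class object in a bucket.

    s3_client is expected to behave like boto3.client("s3").
    Returns the list of keys for which a restore was requested.
    """
    requested = []
    paginator = s3_client.get_paginator("list_objects_v2")
    for page in paginator.paginate(Bucket=bucket):
        # "Contents" is absent from a page that lists no objects.
        for obj in page.get("Contents", []):
            if obj.get("StorageClass") != "GLACIER":
                continue  # not in the GLACIER storage class, skip it
            s3_client.restore_object(
                Bucket=bucket,
                Key=obj["Key"],
                RestoreRequest={
                    "Days": days,  # how long the restored copy stays available
                    "GlacierJobParameters": {"Tier": tier},
                },
            )
            requested.append(obj["Key"])
    return requested


# Example usage (needs boto3 and AWS credentials; the bucket name is made up):
#   import boto3
#   keys = request_glacier_restores(boto3.client("s3"), "example-dr-bucket")
#   print(f"requested restore of {len(keys)} objects")
```

A real run would also want to handle the error S3 returns when a restore is already in progress for an object, and to throttle the requests with the billing rules above in mind.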


Link:

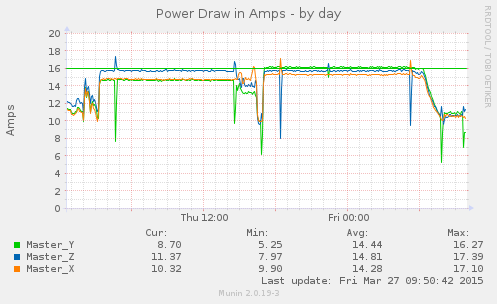

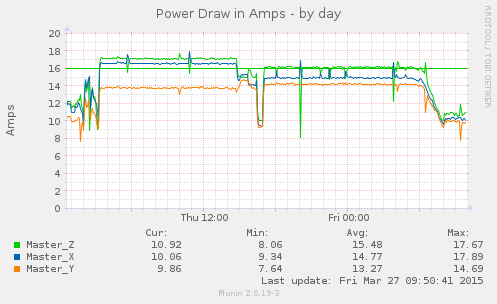

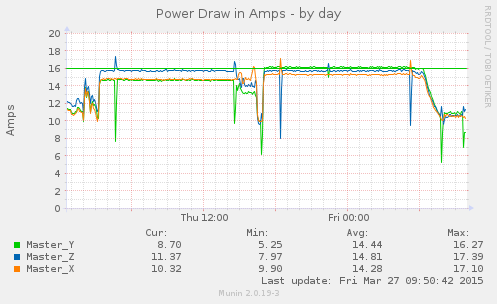

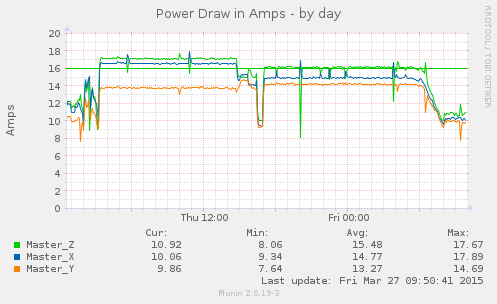

https://dannyman.toldme.com/2015/03/27/balancing-3-phase-power/

I have some racks in a data center that were designed to use 3-phase PDUs. This allows for greater density, since a 3-phase circuit delivers more watts. The way three-phase works is that the PDU breaks the circuit into three branches, and each branch is split across two of the legs. Something like:

Branch A: leg X->Y

Branch B: leg Y->Z

Branch C: leg Z->X

The load needs to be balanced as evenly as possible across the three branches. When the PDU tells me the power draw on Branch A is, say, 3A, I am not really sure whether that is because of equipment on Branch A or … Branch C? This only becomes a problem when one branch reports chronic overloading and I want to balance the loads.

I chatted with a friend who has a PhD in this stuff. He said that if my servers don’t all have similar characteristics, then the math says I want to randomly distribute the load. That is hard to do on an existing rack.

I came up with an alternative approach. Most of my servers fall into clusters of similar hardware and what I would call a “load profile.” I had a rack powered by two unbalanced 3-phase circuits. I counted through the rack’s server inventory and classified each host into a series of cohorts based on their hardware and load profile. Two three-phase circuits gives me six branches to work with, so I divided the total by six to come up with a “branch quota.” Something like:

<= 2 hadoop node, hardware type A

<= 2 hadoop node, hardware type B

1 spark node, hardware type A

1 spark node, hardware type C

1 misc node, hardware type C

I then sat down with a pad and paper, one circuit on either side of the sheet, and wrote down what servers I had, and where the circuit was relative to quota. So, one circuit might have started off as:

Circuit A, Branch XY:

INVENTORY

hadoopA-12

hadoopA-13

hadoopA-14

sparkA-5

miscC-5

QUOTA

hadoopA: RM 1

hadoopB: ADD 2

sparkA: --

sparkC: ADD 1

misc: --

I then moved servers around, updating each branch circuit with pencil and eraser as I went, like a diligent Dungeon Master. (And also, very important: the PDU receptacle configuration.)
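The pad-and-paper bookkeeping above can also be sketched in a few lines of Python. The rack-wide cohort totals below are hypothetical numbers picked so that the quotas match the example, and branch_quota and branch_deltas are illustrative names only.

```python
import math
from collections import Counter

BRANCHES = 6  # two 3-phase circuits, three branches each


def branch_quota(cohort_totals, branches=BRANCHES):
    """Upper bound on hosts per branch for each cohort (rounded up)."""
    return {cohort: math.ceil(total / branches)
            for cohort, total in cohort_totals.items()}


def branch_deltas(branch_inventory, quota):
    """Hosts to add (positive) or remove (negative) per cohort on one branch."""
    have = Counter(branch_inventory)
    return {cohort: limit - have[cohort] for cohort, limit in quota.items()}


# Hypothetical rack-wide totals per cohort (hardware type plus load profile).
totals = {"hadoopA": 11, "hadoopB": 10, "sparkA": 6, "sparkC": 5, "miscC": 6}
quota = branch_quota(totals)

# Cohort of each host on Circuit A, Branch XY, from the example above.
branch_xy = ["hadoopA", "hadoopA", "hadoopA", "sparkA", "miscC"]

for cohort, delta in branch_deltas(branch_xy, quota).items():
    if delta == 0:
        action = "--"
    elif delta > 0:
        action = f"ADD {delta}"
    else:
        action = f"RM {-delta}"
    print(f"{cohort}: {action}")
```

With these totals the output reproduces the QUOTA column for Branch XY: remove one hadoopA, add two hadoopB, add one sparkC, and leave the rest alone. The script only does the counting; which physical receptacle each server lands on still has to be worked out at the rack.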

The end result:

I started moving servers around Thu 15:00, and was done about 16:30, which is also when the hadoop cluster went idle. It kicked back up again at 17:00, and started spinning down around midnight.

What is important is to keep sustained load on the branches under the straight green line, which represents 80% of circuit capacity. You can see that on the left, the second circuit especially had two branches running “hot.” After the re-balancing, the branch loads fly closer together and top out at the green line.


Link:

https://dannyman.toldme.com/2015/03/23/nagios-delay-warning-notifications/

I have a Nagios check to monitor the power being drawn through our PDUs. While setting all this up, I had to figure out alert thresholds. The convention for disk capacity is warn at 80%, critical at 90%. I also had this gem to work with:

National Electric Code requires that the continuous current drawn from a branch circuit not exceed 80% of the circuit’s maximum rating. “Continuous current” is any load sustained continuously for at least 3 hours.

(Thanks to Mike Pennington, via http://serverfault.com/a/413307/72839)

So, I went with 80% warning, 90% critical.

Lately I have been getting a lot of warning notifications about circuits exceeding 80%. Ah, but the NEC says that is only a problem if they are at 80% for more than three hours. So, I dig through Nagios documentation and split my check out into two services:

    define service{ # PDU load at 90% of circuit rating
        use                         generic-service
        hostgroup_name              pdus
        service_description         Power Load Critical
        notification_options        c,u,r
        check_command               check_sentry
        contact_groups              admins
    }

    define service{ # PDU sustained load at 80% of circuit rating for 3 hours
        use                         generic-service
        hostgroup_name              pdus
        service_description         Power Load High
        notification_options        w,r
        first_notification_delay    180
        check_command               check_sentry
        contact_groups              admins
    }

The first part limits regular notifications to critical alerts. In the second case, the first_notification_delay should cover the “don’t bug me unless it has been happening for three hours” caveat and I set that service to only notify on warnings and recovery.
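For a sense of the numbers involved, here is a small Python sketch of the arithmetic behind those two services. The 30 A circuit rating is a made-up example and the helper names are hypothetical. The delay calculation assumes Nagios's interval_length is left at its default of 60 seconds, in which case first_notification_delay is counted in minutes.

```python
def load_thresholds(circuit_rating_amps, warn_pct=80, crit_pct=90):
    """Return (warning, critical) current thresholds in amps."""
    warning = circuit_rating_amps * warn_pct / 100
    critical = circuit_rating_amps * crit_pct / 100
    return warning, critical


def notification_delay_units(hours, interval_length_seconds=60):
    """Convert a delay in hours into Nagios time units."""
    return int(hours * 3600 / interval_length_seconds)


warn, crit = load_thresholds(30)  # hypothetical 30 A branch circuit
print(f"warn at {warn} A, critical at {crit} A")
print(f"first_notification_delay {notification_delay_units(3)}")
```

Three hours works out to the 180 used in the service definition above. If interval_length has been changed from the default, the value would need to be scaled accordingly.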


Link:

https://dannyman.toldme.com/2015/03/06/howto-program-agile/

There seems to be some backlash going on against the religion of “Agile Software Development” and it is best summarized by PragDave, reminding us that the “Agile Manifesto” first places “Individuals and interactions over processes and tools” — there are now a lot of Agile Processes and Tools which you can buy in to . . .

He then summarizes how to work agilely:

What to do:

Find out where you are

Take a small step towards your goal

Adjust your understanding based on what you learned

Repeat

How to do it:

When faced with two or more alternatives that deliver roughly the same value, take the path that makes future change easier.

Sounds like sensible advice. I think I’ll print that out and tape it on my display to help me keep focused.


Link:

https://dannyman.toldme.com/2014/11/22/windows-8-wtf-microsoft/

I had the worst experience at work today: I had to prepare a computer for a new employee. That’s usually a pretty painless procedure, but this user was to be on Windows, and I had to … well, I had to call it quits after making only mediocre progress. This evening I checked online to make sure I’m not insane. A lot of people hate Windows 8, so I enjoyed clicking through a few reviews online, and then I just had to respond to Badger25’s review of Windows 8.1:

I think you are being way too easy on Windows 8.1 here, or at least insulting to the past. This isn’t a huge step backwards to the pre-Windows era: in DOS you could get things done! This is, if anything, a “Great Leap Forward” in which anything that smells of traditional ways of doing things has been purged in order to strengthen the purity of a failed ideology.

As far as boot speed, I was used to Windows XP booting in under five seconds. That was probably the first incarnation of Windows I enjoyed using. I just started setting up a Windows 8 workstation today for a business user and it is the most infuriatingly obtuse Operating System I have ever, in decades, had to deal with. (I am a Unix admin, so I’ve seen things….) This thing does NOT boot fast, or at least it does not reboot fast, because of all the updates which must be slowly applied.

Oddly enough, it seems that these days, the best computer UIs are offered by Linux distros, and they have weird gaps in usability, then Macs, then … I wouldn’t suggest Windows 8 on anyone except possibly those with physical or mental disabilities. Anyone who is used to DOING THINGS with computers is going to feel like they are using the computer with their head wrapped in a hefty bag. The thing could trigger panic attacks.

Monday is another day. I just hope the new employee doesn’t rage quit.


Link:

https://dannyman.toldme.com/2014/10/07/ansible-set-conditional-handler/

I have a playbook which installs and configures NRPE. The packages and services are different on Red Hat versus Debian-based systems, but my site configuration is the same. I burnt a fair amount of time trying to figure out how to allow the configuration tasks to notify a single handler. The result looks something like:

    # Debian or Ubuntu
    - name: Ensure NRPE is installed on Debian or Ubuntu
      when: ansible_pkg_mgr == 'apt'
      apt: pkg=nagios-nrpe-server state=latest

    - name: Set nrpe_handler to nagios-nrpe-server
      when: ansible_pkg_mgr == 'apt'
      set_fact: nrpe_handler='nagios-nrpe-server'

    # RHEL or CentOS
    - name: Ensure NRPE is installed on RHEL or CentOS
      when: ansible_pkg_mgr == 'yum'
      yum: pkg={{item}} state=latest
      with_items:
        - nagios-nrpe
        - nagios-plugins-nrpe

    - name: Set nrpe_handler to nrpe
      when: ansible_pkg_mgr == 'yum'
      set_fact: nrpe_handler='nrpe'

    # Common
    - name: Ensure NRPE will talk to Nagios Server
      lineinfile: dest=/etc/nagios/nrpe.cfg regexp='^allowed_hosts=' line='allowed_hosts=nagios.domain.com'
      notify:
        - restart nrpe

    ### A few other common configuration settings ...

Then, over in the handlers file:

    # Common
    - name: restart nrpe
      service: name={{nrpe_handler}} state=restarted

The trick boiled down to using the set_fact module.


Link:

https://dannyman.toldme.com/2014/05/15/vmware-retina-thunderbolt-constant-resolution/

Apple ships some nice hardware, but the Mac OS is not my cup of tea. So, I run Ubuntu (kubuntu) within VMWare Fusion as my workstation. It has nice features like sharing the clipboard between host and guest, and the ability to share files to the guest. Yay.

At work, I have a Thunderbolt display, which is a very comfortable screen to work at. When I leave my desk, the VMWare guest transfers to the Retina display on my Mac. That is where the trouble starts. You can have VMWare give it less resolution or full Retina resolution, but in either case, the screen size changes and I have to move my windows around.

The fix?

1) In the guest OS, set the display size to: 2560×1440 (or whatever works for your favorite external screen …)

2) Configure VMWare, per https://communities.vmware.com/message/2342718

2.1) Edit Library/Preferences/VMware Fusion/preferences

Set these options:

pref.autoFitGuestToWindow = "FALSE"

pref.autoFitFullScreen = "stretchGuestToHost"

2.2) Suspend your VM and restart Fusion.

Now I can use Exposé to drag my VM between the Thunderbolt display and the Mac’s Retina display, and back again, and things are really comfortable.

The only limitation is that since the aspect ratios differ slightly, the Retina display shows my VM environment in a slight letterbox, but it is not all that obvious on a MacBook Pro.


Link:

https://dannyman.toldme.com/2014/05/14/new-phishing-scam-call-toll-free-to-refresh-your-ip-address/

I reported the following to the FBI, to LogMeIn123.com, to Century Link, and to Bing, and now I’ll share the story with you.

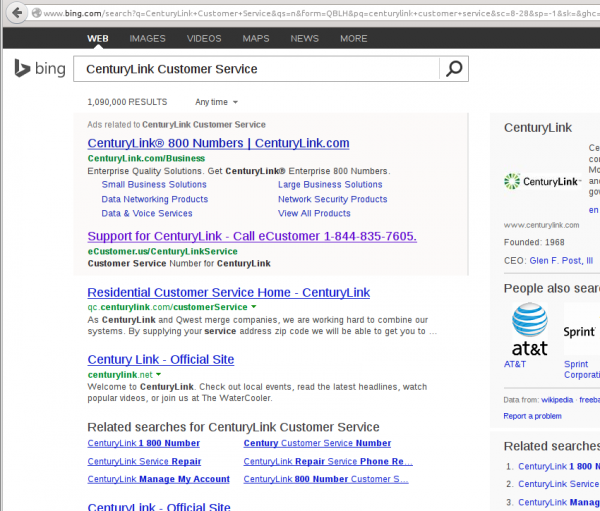

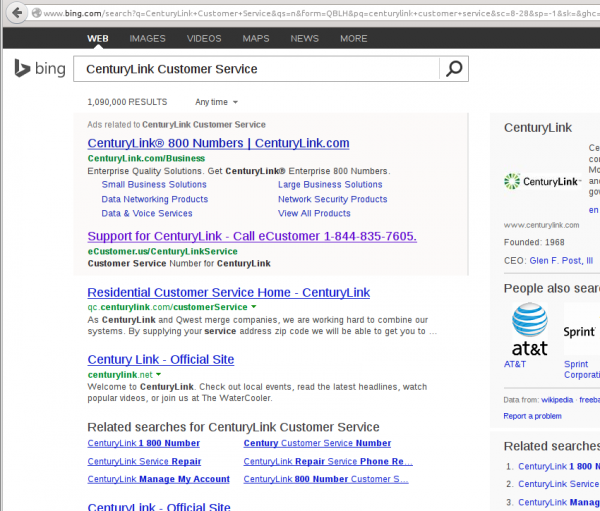

Yesterday, May 12, 2014, a relative was having trouble with Netflix. So she went to Bing and did a search for her ISP’s technical support:

Bing leads you to a convenient toll-free number to call for technical support!

She called the number: 844-835-7605 and spoke with a guy who had her go to LogMeIn123.com so he could fix her computer. He opened up something that revealed to her the presence of “foreign IP addresses” and then showed her the Wikipedia page for the Zeus Trojan Horse. He explained that she would need to refresh her IP address and that their Microsoft Certified Network Security whatevers could do it for $350 and they could take a personal check since her computer was infected and they couldn’t do a transaction online.

So, she conferenced me in. I said that she could just reinstall Windows, but he said no, as long as the IP was infected it would need to be refreshed. I said, well, what if we just destroyed the computer. No, no, the IP is infected. “An IP address is a number: how can it get infected?” I then explained that I was a network administrator . . . he said he would check with his manager. That was the last we heard from him.

I advised her that this sounded very very very much like a phishing scam and that she should call the telephone number on the bill from her ISP. She did that and they were very interested in her experience.

I was initially very worried that she had a virus that managed to fool her into calling a different number for her ISP. I followed up the next day, using similar software to VNC into her computer. I checked the browser history and found that the telephone number was right there in Bing for all the world to see. She doesn’t have a computer virus after all! (I’ll take a closer look tonight . . .)

I submitted a report to the FBI, LogMeIn123.com, Bing, and Century Link. And now I share the story here. It’s a phishing scam that doesn’t even require an actual computer virus to work!

